Synthesizing data for ETL and Application Testing

Besides the convenience it brings, technological innovation has given us the ability to generate enormous amounts of data, more than ever before. Approximately 17.5 quintillion bytes of data are generated every week, and that figure keeps growing. This raises the problem of handling, maintaining, and storing so much data. A well-established way to do this is ETL, short for Extract, Transform, and Load.

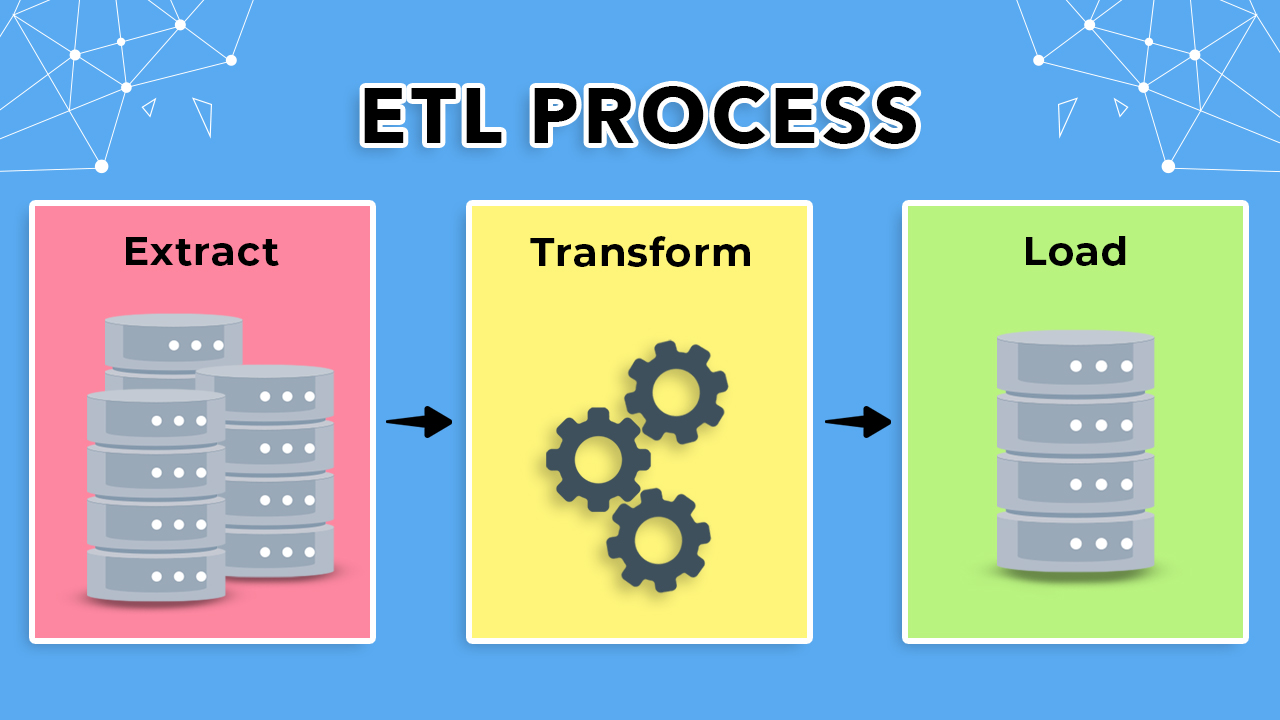

Concept of ETL

Data is typically of little use until it has been processed and used to draw conclusions. This principle underpins the processes and solutions built for clients. ETL tools therefore include mechanisms for data profiling, analysis, data management, cleansing, and many other integrations. By definition, ETL is a standard procedure that extracts data from different sources, standardizes or transforms it, and finally stores it at the required destination. The result is a data repository in which essential data is stored in an organized way.

Extract

Extraction is the first step in ETL: data stored on different platforms, from various sources and in multiple formats, is collected. The aim is to extract this data quickly without degrading the retrieval performance of the source systems. Extraction methods can be classified as logical or physical.

Logical extraction

- Incremental extraction

  - Changes in the source system are monitored regularly, and only the records modified since the last extraction (identified by their timestamps) are extracted.

- Full extraction

  - All of the source data is extracted.
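To make the difference between the two logical methods concrete, here is a minimal Python sketch that uses an in-memory SQLite database as a stand-in source. The `orders` table, its `updated_at` column (ISO-8601 text timestamps), and the helper names are assumptions for illustration; a real incremental job would also persist the timestamp of its last successful run.

```python
import sqlite3

# Assumed source table: orders(id, amount, updated_at) with ISO-8601 text
# timestamps, which compare correctly as plain strings.

def build_demo_source():
    """Create an in-memory SQLite database standing in for the source system."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [
            (1, 10.00, "2024-01-01T09:00:00"),
            (2, 25.50, "2024-01-02T12:30:00"),
            (3, 7.25, "2024-01-03T08:15:00"),
        ],
    )
    return conn

def full_extract(conn):
    """Full extraction: read every row in the source table."""
    return conn.execute("SELECT id, amount, updated_at FROM orders ORDER BY id").fetchall()

def incremental_extract(conn, last_run):
    """Incremental extraction: read only rows modified after the previous run's timestamp."""
    return conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY id",
        (last_run,),
    ).fetchall()

if __name__ == "__main__":
    src = build_demo_source()
    print(len(full_extract(src)))  # 3
    print([row[0] for row in incremental_extract(src, "2024-01-02T00:00:00")])  # [2, 3]
```

The incremental query touches only the changed rows, which is why it places less load on the source than a full extraction.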

Physical extraction

- Online extraction

  - Data is extracted directly from the source system.

- Offline extraction

  - Data is not extracted directly from the source system; instead, it is read from a copy explicitly staged outside it, such as a flat file or a database dump.
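Offline extraction can be sketched as two separate steps: a dump job that stages a table's contents in a CSV file, and an extraction step that reads only that staged file. This is a minimal sketch assuming SQLite as the source, with an in-memory `io.StringIO` standing in for the staging file; the table name, columns, and function names are hypothetical. The table name is interpolated into SQL, so it must come from trusted configuration.

```python
import csv
import io
import sqlite3

def stage_dump(conn, table, staging_file):
    """Dump job: copy a source table into a CSV staging file, header row first.
    `table` is interpolated into SQL, so it must come from trusted configuration."""
    cursor = conn.execute(f"SELECT * FROM {table}")
    writer = csv.writer(staging_file)
    writer.writerow([column[0] for column in cursor.description])
    writer.writerows(cursor)

def offline_extract(staging_file):
    """Offline extraction: read records from the staged file, never from the source."""
    staging_file.seek(0)
    return list(csv.DictReader(staging_file))

if __name__ == "__main__":
    source = sqlite3.connect(":memory:")
    source.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    source.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Alan")])

    staged = io.StringIO()  # stands in for a file on disk or shared storage
    stage_dump(source, "customers", staged)
    source.close()  # the source is no longer needed for extraction
    print(offline_extract(staged))  # [{'id': '1', 'name': 'Ada'}, {'id': '2', 'name': 'Alan'}]
```

Note that CSV staging turns every value into text, so the transform step has to convert types again.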

Transform

Data collected during extraction must be transformed into a standardized form that is classified and ready to consume. Validation follows a specific set of rules derived from business requirements and applied with the relevant conversion metrics. A data mapping process, which defines how source fields correspond to target fields, is also part of this step.
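Data mapping and rule-based validation can be illustrated with a small Python sketch. The source field names, target field names, date format, and cents-to-currency conversion below are all hypothetical examples of business rules; a record is rejected when any conversion fails, so invalid data does not reach the target.

```python
from datetime import datetime

# Hypothetical mapping: source field -> (target field, conversion rule).
FIELD_MAP = {
    "cust_name": ("customer_name", str.strip),
    "order_dt": ("order_date", lambda s: datetime.strptime(s, "%d/%m/%Y").date().isoformat()),
    "amt_cents": ("amount", lambda s: int(s) / 100),
}

def map_record(source):
    """Map one source record onto the target schema, converting each field.
    Raises KeyError or ValueError if a field is missing or fails validation."""
    return {tgt: convert(source[src]) for src, (tgt, convert) in FIELD_MAP.items()}

def transform(records):
    """Split records into (mapped, rejected) according to FIELD_MAP."""
    mapped, rejected = [], []
    for record in records:
        try:
            mapped.append(map_record(record))
        except (KeyError, ValueError):
            rejected.append(record)
    return mapped, rejected

if __name__ == "__main__":
    good, bad = transform([
        {"cust_name": " Ada ", "order_dt": "05/03/2024", "amt_cents": "1999"},
        {"cust_name": "Bob", "order_dt": "2024-03-05", "amt_cents": "100"},  # wrong date format
    ])
    print(good)  # [{'customer_name': 'Ada', 'order_date': '2024-03-05', 'amount': 19.99}]
    print(len(bad))  # 1
```

Keeping the mapping in a data structure rather than in code makes it easy to review against the business rules it is meant to encode.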

Business rules keep the data relevant to the business's use cases and policies. They can be organized in two ways:

- Hierarchical rules

  - Each rule depends on the outcome of the previous rule.

- Autonomous rules

  - Each rule runs independently of the others.
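One way to read the two rule styles is sketched below in Python, under the assumption that a rule is a function returning an error message or `None`. Hierarchical rules run in order and stop at the first failure, because later rules rely on earlier ones having passed; autonomous rules all run and report every failure. The rule functions and record fields are hypothetical.

```python
from typing import Callable, Optional

# Assumption: a rule takes a record and returns an error message, or None if it passes.
Rule = Callable[[dict], Optional[str]]

def email_present(rec: dict) -> Optional[str]:
    return None if rec.get("email") else "email missing"

def email_has_at(rec: dict) -> Optional[str]:
    # Depends on email_present having passed: it assumes the key exists.
    return None if "@" in rec["email"] else "email malformed"

def age_in_range(rec: dict) -> Optional[str]:
    age = rec.get("age")
    return None if isinstance(age, int) and 0 <= age <= 130 else "age out of range"

def run_hierarchical(rec: dict, rules: list[Rule]) -> list[str]:
    """Hierarchical: evaluate in order and stop at the first failure,
    because each rule relies on the previous ones having passed."""
    for rule in rules:
        error = rule(rec)
        if error:
            return [error]
    return []

def run_autonomous(rec: dict, rules: list[Rule]) -> list[str]:
    """Autonomous: evaluate every rule independently and collect all failures."""
    return [error for error in (rule(rec) for rule in rules) if error]

if __name__ == "__main__":
    record = {"age": 200}  # no email at all
    print(run_hierarchical(record, [email_present, email_has_at]))  # ['email missing']
    print(run_autonomous(record, [email_present, age_in_range]))   # ['email missing', 'age out of range']
```

In the hierarchical run, `email_has_at` never executes, which is what protects it from the missing key.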

Data filtration and cleansing remove errors and promote consistency and usability before the data is transferred. Typical steps include:

- Importing data using delimited formats

- Splitting columns into subcategories so that each field holds an atomic value

- Applying a uniform format and letter case

- Parsing formats appropriately before merging data

- Checking sources for corrupt data

- Standardizing processes and validating accuracy

- Removing extra blank spaces and stray formatting

- Removing duplicate records

- Filling in missing data and metadata to improve final quality

- Training the team to follow a standardized protocol for maintaining clean data
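Several of these steps (checking for corrupt rows, removing extra spaces, applying a uniform case, filling missing values, and removing duplicates) can be combined in a short standard-library Python sketch. The sample CSV, the rule that a non-numeric `id` marks a corrupt row, and the `UNKNOWN` placeholder for missing cities are assumptions chosen for illustration.

```python
import csv
import io

# Hypothetical raw extract with messy spacing, mixed case, a duplicate,
# a missing value, and a corrupt row.
RAW = """id,name,city
1,  Ada Lovelace ,LONDON
2,Alan Turing,  manchester
2,Alan Turing,  manchester
3,Grace Hopper,
x,Corrupt Row,Nowhere
"""

def cleanse(text, missing_city="UNKNOWN"):
    """Return (clean records, rejected rows)."""
    clean, rejected, seen = [], [], set()
    for row in csv.DictReader(io.StringIO(text)):
        # Error check: treat a non-numeric id as corrupt data.
        if not row["id"].strip().isdigit():
            rejected.append(row)
            continue
        record = {
            "id": int(row["id"]),
            "name": " ".join(row["name"].split()),                # remove extra blank spaces
            "city": row["city"].strip().title() or missing_city,  # uniform case, fill missing value
        }
        key = (record["id"], record["name"], record["city"])
        if key in seen:  # eliminate duplicates
            continue
        seen.add(key)
        clean.append(record)
    return clean, rejected

if __name__ == "__main__":
    records, errors = cleanse(RAW)
    for r in records:
        print(r)
    print(len(errors), "rejected")
```

Duplicates are detected after normalization, so rows that differ only in spacing or case are also caught.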

Load

The final step loads the transformed data into the target system, ideally with minimal resource use and downtime. The constraints defined in the target schema must be handled during the load.
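Here is a minimal sketch of a load step that respects schema constraints, assuming SQLite as the target and a hypothetical `customers` table whose primary key, `NOT NULL`, and `UNIQUE` constraints reject bad rows. Rows that violate a constraint are collected with the database's error message instead of aborting the whole load; a production pipeline might route them to an error table.

```python
import sqlite3

# Assumed target schema: the constraints below are what the load must respect.
SCHEMA = """CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
)"""

def load(conn, rows):
    """Insert rows in a single transaction; collect rows that violate a constraint."""
    rejected = []
    with conn:  # commits on success, rolls back if an unexpected exception escapes
        for row in rows:
            try:
                conn.execute("INSERT INTO customers (id, email) VALUES (?, ?)", row)
            except sqlite3.IntegrityError as exc:
                rejected.append((row, str(exc)))
    return rejected

if __name__ == "__main__":
    target = sqlite3.connect(":memory:")
    target.execute(SCHEMA)
    bad = load(target, [(1, "a@example.com"), (2, "a@example.com"), (3, None), (1, "b@example.com")])
    print(target.execute("SELECT COUNT(*) FROM customers").fetchone()[0])  # 1
    for row, reason in bad:
        print(row, reason)
```

Wrapping the loop in one transaction keeps the target consistent: either all accepted rows are committed together or none are.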

A commonly cited estimate is that data scientists spend about 80% of their time cleansing data and only 20% analyzing it.

For many businesses, ETL is the main data maintenance operation. The resulting data collections support better decision-making and new initiatives, and they can be used to generate trend reports. Data can also be reused, which saves considerable cost and time. Intelligent ETL tools also raise productivity: professionals no longer have to write generic scripts, because the tools provide much of the technical capability those scripts would otherwise supply.

ETL Testing

ETL testing verifies that data was transferred accurately from the source system to the target. The process typically involves five steps:

- Identifying the sources

- Procuring data, reviewing the schema and data mapping, and analyzing metadata

- Applying business rules and validating the architecture used

- Logging errors, populating data, and verifying the error-handling mechanism

- Preparing test data and generating the report
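Test-data preparation and a basic source-to-target check can be sketched together in Python. The sketch generates deterministic synthetic rows from a fixed random seed, loads them into two SQLite databases standing in for source and target, and compares row counts plus a SHA-256 fingerprint of the ordered rows. The `sales` table, its columns, and the function names are hypothetical; real ETL tests also check transformation rules, not only whether data arrived unchanged.

```python
import hashlib
import random
import sqlite3

def synthesize_rows(n, seed=42):
    """Generate n deterministic synthetic rows (id, name, amount) from a fixed seed."""
    rng = random.Random(seed)
    return [(i, f"user_{i}", round(rng.uniform(1, 500), 2)) for i in range(1, n + 1)]

def make_db(rows):
    """Create an in-memory database with a hypothetical `sales` table holding `rows`."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    return conn

def fingerprint(conn):
    """Return (row count, SHA-256 hex digest of all rows in id order)."""
    digest, count = hashlib.sha256(), 0
    for row in conn.execute("SELECT id, name, amount FROM sales ORDER BY id"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()

def reconcile(source, target):
    """ETL check: source and target must agree on row count and row content."""
    return fingerprint(source) == fingerprint(target)

if __name__ == "__main__":
    rows = synthesize_rows(1_000)
    source, target = make_db(rows), make_db(rows)
    print(reconcile(source, target))  # True
    target.execute("UPDATE sales SET amount = amount + 0.01 WHERE id = 7")  # simulate a defect
    print(reconcile(source, target))  # False
```

The fixed seed makes every test run reproducible. Comparing `repr` of rows works here because both sides are SQLite; across different database engines, values would need a normalized representation first.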

Application Testing

Application testing aims to ensure that the software is free of glitches. Over 52% of teams do not perform in-depth application testing because they lack sufficient time. However, thorough application testing yields a higher ROI and better quality control. The process involves five steps:

- Designing test cases from the specifications

- Writing automated test scripts and manual test cases

- Executing the validated tests

- Load testing, then tuning application performance

- Tracking bugs and generating a report
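The first steps (designing test cases from a specification and writing automated test scripts) can be illustrated with Python's built-in `unittest` module. The `apply_discount` function and its specification are hypothetical; each test method corresponds to one clause of that assumed specification, including boundary and invalid inputs.

```python
import unittest

def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test. Assumed spec: price >= 0,
    0 <= percent <= 100, result rounded to 2 decimal places."""
    if price < 0 or not 0 <= percent <= 100:
        raise ValueError("price must be >= 0 and percent within 0..100")
    return round(price * (100 - percent) / 100, 2)

class ApplyDiscountTests(unittest.TestCase):
    # Each test maps to one clause of the (hypothetical) specification.
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_boundaries(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)
        self.assertEqual(apply_discount(99.99, 100), 0.0)

    def test_rounding(self):
        self.assertEqual(apply_discount(10.0, 33), 6.7)

    def test_invalid_input_rejected(self):
        for price, percent in [(-1, 10), (10, -5), (10, 101)]:
            with self.subTest(price=price, percent=percent):
                with self.assertRaises(ValueError):
                    apply_discount(price, percent)
```

Saved in a file named, for example, `test_discount.py`, the suite runs with `python -m unittest test_discount`, which makes it easy to execute on every build.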

Application testing applies to desktop, mobile, and web applications and is important before launch and final deployment. These tests check that the application performs well across different web browsers and operating systems (desktop and mobile). They help ensure that the application delivers value and meets customers' needs and requirements, which can lead to a higher ROI and better customer retention. An improved customer experience also strengthens the brand's reputation, which can help the business stand out in its industry.